What you should know: I am currently working for Orbit Cloud Solutions as a Cloud Advisor, but any posts on this blog reflect my own views and opinions only.

One limitation I ran into recently with OCI was the lack of support for hybrid DNS. A typical use case is private DNS zones that give nicer names to the servers in your private network. These servers are never meant to be visible to the world, so their names do not belong in your public DNS records. Think of it as /etc/hosts, but with less messy maintenance.

Update: there is finally a proper solution for private DNS on Oracle OCI, which makes the workarounds described in my posts obsolete.

Both Azure and AWS let you set up hybrid DNS, but Oracle OCI is missing this feature, or at least I am not aware of such a solution at the time of writing. Of course there is a workaround, described by Oracle's A-Team in their blog post on hybrid DNS: they add dnsmasq services that forward DNS queries for private DNS zones to the proper DNS servers. In this post I describe an example setup in Terraform.

You can find my source code for this example on GitHub.

Overview

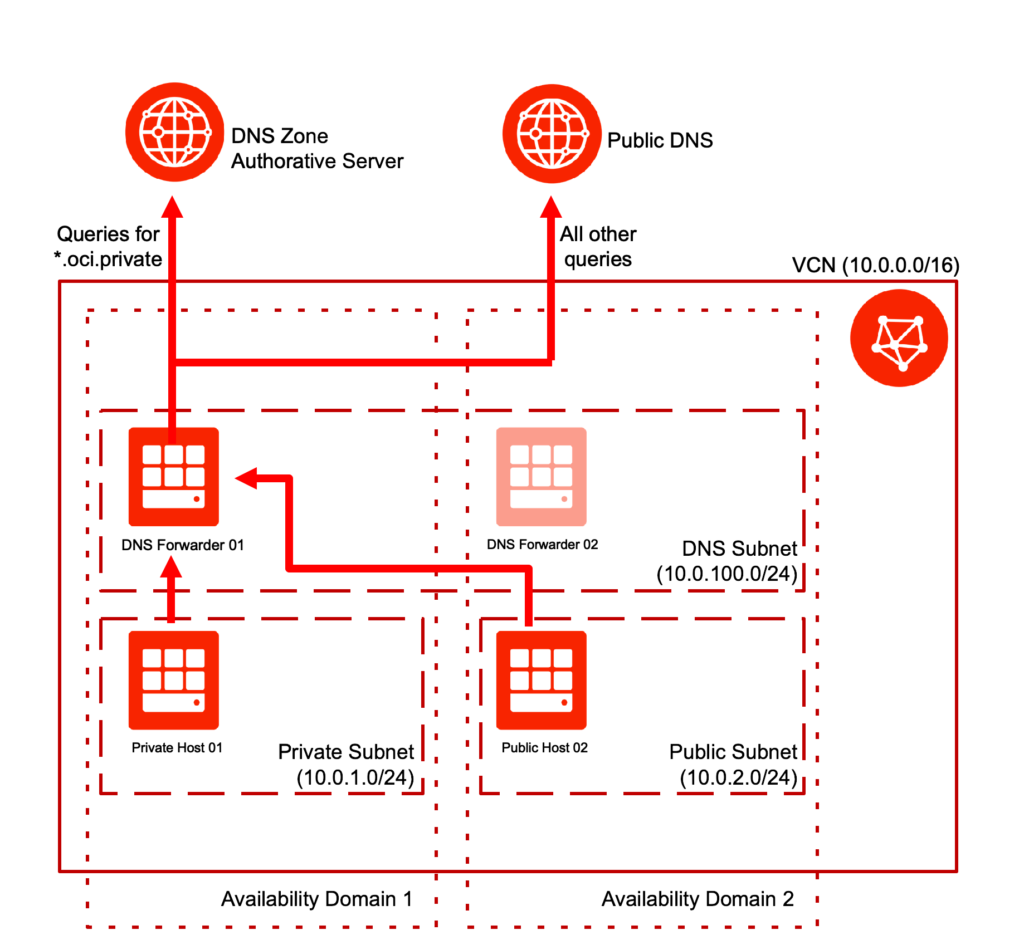

For the private DNS zones, we use two redundant DNS forwarders in separate availability domains. This is a poor man's solution; you might add a load balancer in front of the DNS forwarders to get better HA characteristics.

Hosts that need to resolve names from the private DNS zones use these DNS forwarders as their nameservers. When such a host queries a name from the private DNS zone, the forwarder asks the authoritative DNS server for that zone; all other queries are handed over to the DNS server OCI provides for public DNS.
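To make the forwarding rule concrete, here is a small Python model of the decision a dnsmasq forwarder makes for each query. It is a sketch, not dnsmasq's code, and assumes that a `server=/<domain>/<ip>` rule applies to the domain and all its subdomains, that the most specific matching domain wins, and that unmatched names go to the upstream servers from /etc/resolv.conf. The function name `pick_upstreams` and the 192.0.2.x nameserver addresses are hypothetical.

```python
from __future__ import annotations


def pick_upstreams(qname: str, rules: dict[str, list[str]], default: list[str]) -> list[str]:
    """Return the upstream servers a dnsmasq-style forwarder would use for qname.

    rules maps a domain (e.g. "oci.private") to the servers from its
    server=/<domain>/<ip> lines; default stands for the resolv.conf servers.
    """
    normalized = {d.lower().rstrip("."): servers for d, servers in rules.items()}
    labels = qname.lower().rstrip(".").split(".")
    # Walk from the full name towards the TLD so the most specific
    # matching domain wins: a.b.c.d, then b.c.d, then c.d, then d.
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in normalized:
            return normalized[suffix]
    # No private rule matched: forward to the default (public) resolver.
    return default


if __name__ == "__main__":
    # Hypothetical addresses for the zone's authoritative nameservers.
    rules = {"oci.private": ["192.0.2.10", "192.0.2.11"]}
    oci_resolver = ["169.254.169.254"]
    print(pick_upstreams("01.priv.appserver.oci.private", rules, oci_resolver))
    print(pick_upstreams("www.oracle.com", rules, oci_resolver))
```

Matching works on whole labels, so a name like notoci.private is not sent to the private zone's nameservers.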

Basic Infrastructure

First we create the basic infrastructure used by the rest of the setup: a VCN (10.0.0.0/16), an internet gateway and a route for traffic between the VCN and the public internet. Nothing fancy here.

```hcl
resource "oci_core_virtual_network" "pdns_vcn" {
  cidr_block     = "${var.oci_cidr_vcn}"
  dns_label      = "pdnsvcn"
  compartment_id = "${var.oci_compartment_ocid}"
  display_name   = "pdns-vcn"
}

resource "oci_core_internet_gateway" "pdns_igw" {
  display_name   = "pdns-internet-gateway"
  compartment_id = "${var.oci_compartment_ocid}"
  vcn_id         = "${oci_core_virtual_network.pdns_vcn.id}"
}

resource "oci_core_default_route_table" "default_route_table" {
  manage_default_resource_id = "${oci_core_virtual_network.pdns_vcn.default_route_table_id}"

  route_rules {
    network_entity_id = "${oci_core_internet_gateway.pdns_igw.id}"
    destination       = "0.0.0.0/0"
  }
}
```
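The routing part can be illustrated with a simplified Python model of where traffic from a host in the VCN goes. It assumes, based on the OCI networking documentation, that traffic between addresses inside the VCN needs no route rule and that the most specific matching rule wins when several match. `next_hop` and the returned labels are hypothetical names for illustration only.

```python
from __future__ import annotations

import ipaddress

VCN_CIDR = ipaddress.ip_network("10.0.0.0/16")

# Mirrors the default route table above: one rule sending everything
# to the internet gateway.
ROUTE_RULES = [
    (ipaddress.ip_network("0.0.0.0/0"), "pdns-internet-gateway"),
]


def next_hop(dest: str) -> str:
    """Return where traffic to dest goes in this simplified model."""
    addr = ipaddress.ip_address(dest)
    if addr in VCN_CIDR:
        # Traffic within the VCN is routed locally without a route rule.
        return "local"
    matching = [(net, target) for net, target in ROUTE_RULES if addr in net]
    if not matching:
        return "no route"
    # Longest prefix match: the most specific rule wins.
    return max(matching, key=lambda rule: rule[0].prefixlen)[1]


if __name__ == "__main__":
    for dest in ("10.0.100.5", "203.0.113.7"):
        print(dest, "->", next_hop(dest))
```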

Private DNS Zone

Now we can add a DNS zone that will handle the private DNS records created later. Because the zone name must be unique across the authoritative DNS servers used by OCI, change the name oci.private to another private domain of your choice; otherwise you will likely run into errors due to name clashes.

```hcl
variable "private_dns_domain" {
  default = "oci.private"
}

resource "oci_dns_zone" "pdns_zone" {
  compartment_id = "${var.oci_compartment_ocid}"
  name           = "${var.private_dns_domain}"
  zone_type      = "PRIMARY"
}
```

DNS forwarders

Now it is time to add the DNS forwarders. They go into a dedicated subnet using the 10.0.100.0/24 IP range. This matters because DHCP options are set per subnet: the forwarders will be configured as DNS servers for the other subnets that use the private DNS records, while the forwarder subnet itself keeps the default settings. Since the forwarders serve DNS, a security list must allow port 53 (TCP and UDP) from the VCN IP range.

```hcl
resource "oci_core_subnet" "dns_forward_subnet" {
  cidr_block        = "${var.oci_cidr_forward_subnet}"
  compartment_id    = "${var.oci_compartment_ocid}"
  vcn_id            = "${oci_core_virtual_network.pdns_vcn.id}"
  display_name      = "dns-forward-subnet"
  dns_label         = "dnsfwsubnet"
  security_list_ids = ["${oci_core_security_list.pdns_sl.id}"]
}

resource "oci_core_security_list" "pdns_sl" {
  compartment_id = "${var.oci_compartment_ocid}"
  vcn_id         = "${oci_core_virtual_network.pdns_vcn.id}"
  display_name   = "pdns-security-list"

  # ICMP from anywhere
  ingress_security_rules {
    source   = "0.0.0.0/0"
    protocol = "1"
  }

  # SSH (TCP/22) from anywhere
  ingress_security_rules {
    source   = "0.0.0.0/0"
    protocol = "6"

    tcp_options {
      min = "22"
      max = "22"
    }
  }

  # DNS over TCP (TCP/53) from the VCN
  ingress_security_rules {
    source   = "${var.oci_cidr_vcn}"
    protocol = "6"

    tcp_options {
      min = "53"
      max = "53"
    }
  }

  # DNS over UDP (UDP/53) from the VCN
  ingress_security_rules {
    source   = "${var.oci_cidr_vcn}"
    protocol = "17"

    udp_options {
      min = "53"
      max = "53"
    }
  }

  egress_security_rules {
    destination = "0.0.0.0/0"
    protocol    = "all"
  }
}
```
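The protocol values in the security list are IANA protocol numbers (1 = ICMP, 6 = TCP, 17 = UDP). The following Python sketch models how the ingress rules above admit or reject a packet, and checks that the forwarder subnet 10.0.100.0/24 lies inside the 10.0.0.0/16 VCN. It is simplified: ICMP type/code options and source port ranges are ignored, and the names `IngressRule` and `is_allowed` are made up for this example.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass

# IANA protocol numbers, as used in the security list above.
ICMP, TCP, UDP = 1, 6, 17

VCN = ipaddress.ip_network("10.0.0.0/16")
FORWARD_SUBNET = ipaddress.ip_network("10.0.100.0/24")
# The forwarder subnet has to be carved out of the VCN range.
assert FORWARD_SUBNET.subnet_of(VCN)


@dataclass(frozen=True)
class IngressRule:
    source: str
    protocol: int
    port_min: int | None = None  # None: no port filter on this rule
    port_max: int | None = None

    def allows(self, src_ip: str, protocol: int, dst_port: int | None) -> bool:
        if ipaddress.ip_address(src_ip) not in ipaddress.ip_network(self.source):
            return False
        if protocol != self.protocol:
            return False
        if self.port_min is None:
            return True
        return dst_port is not None and self.port_min <= dst_port <= self.port_max


# The four ingress rules of pdns-security-list.
PDNS_INGRESS = [
    IngressRule("0.0.0.0/0", ICMP),
    IngressRule("0.0.0.0/0", TCP, 22, 22),
    IngressRule(str(VCN), TCP, 53, 53),
    IngressRule(str(VCN), UDP, 53, 53),
]


def is_allowed(src_ip: str, protocol: int, dst_port: int | None = None) -> bool:
    # Rules are additive: a packet is admitted if any rule matches it.
    return any(rule.allows(src_ip, protocol, dst_port) for rule in PDNS_INGRESS)
```

Because OCI security list rules are stateful by default, the replies to admitted DNS queries need no extra rule; the model leaves that out.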

Now we can add the DNS forwarder hosts. Since these hosts need some basic setup for dnsmasq, we use cloud-init to create the dnsmasq.conf file, install the service and open port 53 when the host is created. For this we use the following template file, templates/dnsmasq.tpl.

```yaml
#cloud-config
write_files:
  # create dnsmasq config
  - path: /etc/dnsmasq.conf
    content: |
      server=/${private_domain}/${zone_dns_1}
      server=/${private_domain}/${zone_dns_2}
      cache-size=0

runcmd:
  # open DNS (udp/53 and tcp/53) in the host firewall
  - firewall-offline-cmd --zone=public --add-port=53/udp
  - firewall-offline-cmd --zone=public --add-port=53/tcp
  # install dnsmasq package
  - yum install dnsmasq -y
  # enable dnsmasq process
  - systemctl enable dnsmasq
  # restart dnsmasq process
  - systemctl restart dnsmasq
  # restart firewalld to apply the firewall changes
  - systemctl restart firewalld
```

The variables private_domain, zone_dns_1 and zone_dns_2 are filled in when Terraform renders the template. For zone_dns_1 and zone_dns_2, the hostnames of the authoritative nameservers of the DNS zone created above are resolved to IP addresses.

```hcl
# Resolve the hostnames of the zone's two authoritative nameservers to IPs
data "dns_a_record_set" "ns1" {
  host = "${lookup(oci_dns_zone.pdns_zone.nameservers[0], "hostname", "")}"
}

data "dns_a_record_set" "ns2" {
  host = "${lookup(oci_dns_zone.pdns_zone.nameservers[1], "hostname", "")}"
}

data "template_file" "dnsmasq" {
  template = "${file("templates/dnsmasq.tpl")}"

  vars = {
    private_domain = "${var.private_dns_domain}"
    # Take the first address, in case a nameserver has several A records
    zone_dns_1     = "${element(data.dns_a_record_set.ns1.addrs, 0)}"
    zone_dns_2     = "${element(data.dns_a_record_set.ns2.addrs, 0)}"
  }
}
```
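Terraform's `${name}` placeholders happen to use the same syntax as Python's `string.Template`, which makes it easy to preview what the dnsmasq.conf part of the template renders to. This is only an illustration: the helper `render_dnsmasq_conf` is hypothetical, and the 192.0.2.x addresses stand in for the real nameserver IPs returned by the `dns_a_record_set` lookups.

```python
from string import Template

# The dnsmasq.conf part of templates/dnsmasq.tpl.
DNSMASQ_TEMPLATE = Template(
    "server=/${private_domain}/${zone_dns_1}\n"
    "server=/${private_domain}/${zone_dns_2}\n"
    "cache-size=0\n"
)


def render_dnsmasq_conf(private_domain: str, zone_dns_1: str, zone_dns_2: str) -> str:
    # substitute() raises KeyError if a placeholder has no value,
    # so a missing variable fails loudly instead of producing a broken config.
    return DNSMASQ_TEMPLATE.substitute(
        private_domain=private_domain,
        zone_dns_1=zone_dns_1,
        zone_dns_2=zone_dns_2,
    )


if __name__ == "__main__":
    # Documentation addresses standing in for the real nameserver IPs.
    print(render_dnsmasq_conf("oci.private", "192.0.2.10", "192.0.2.11"), end="")
```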

The most interesting part is probably the user_data attribute in the metadata section, which refers to the rendered template. The second instance is created the same way, except that it uses another availability domain (AD2).

```hcl
resource "oci_core_instance" "dns_forward_instance_01" {
  availability_domain = "${lookup(data.oci_identity_availability_domains.pdns_ads.availability_domains[0], "name")}"
  compartment_id      = "${var.oci_compartment_ocid}"
  shape               = "VM.Standard2.1"

  create_vnic_details {
    subnet_id              = "${oci_core_subnet.dns_forward_subnet.id}"
    assign_public_ip       = true
    skip_source_dest_check = true
  }

  display_name = "dnsforward-01"

  metadata = {
    ssh_authorized_keys = "${var.ssh_public_key}"
    # cloud-init user data rendered from templates/dnsmasq.tpl
    user_data           = "${base64encode(data.template_file.dnsmasq.rendered)}"
  }

  source_details {
    source_id   = "${var.oci_base_image}"
    source_type = "image"
  }

  preserve_boot_volume = false
}
```

Now everything is in place to create the hosts and take a quick look at them. /etc/resolv.conf still points to the default OCI DNS server, while /etc/dnsmasq.conf contains the authoritative DNS servers for the private DNS zone.
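To double-check the rendered file on a forwarder, a few lines of Python are enough to pull out the `server=/<domain>/<ip>` entries. The sketch below (the function name `parse_dnsmasq_servers` is hypothetical) handles only that directive and skips everything else; on a forwarder you would pass it the contents of /etc/dnsmasq.conf instead of the sample string with its placeholder 192.0.2.x addresses.

```python
from __future__ import annotations

from collections import defaultdict

SAMPLE = """\
server=/oci.private/192.0.2.10
server=/oci.private/192.0.2.11
cache-size=0
"""


def parse_dnsmasq_servers(conf_text: str) -> dict[str, list[str]]:
    """Collect server=/<domain>[/<domain>...]/<addr> lines into {domain: [addr, ...]}."""
    servers: defaultdict[str, list[str]] = defaultdict(list)
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line.startswith("server=/"):
            continue  # comments, blank lines and other directives
        # "/oci.private/192.0.2.10" -> ["", "oci.private", "192.0.2.10"]
        parts = line[len("server="):].split("/")
        *domains, addr = parts[1:]
        for domain in domains:
            servers[domain].append(addr)
    return dict(servers)


if __name__ == "__main__":
    print(parse_dnsmasq_servers(SAMPLE))
```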

Adding some hosts for testing

For testing, we create one private and one public subnet for the test hosts. Before creating the subnets, we add DHCP options that use our DNS forwarders as nameservers. As a fallback in case both forwarders are down, we also add the OCI default nameserver with its static IP.

```hcl
resource "oci_core_dhcp_options" "pdns_dhcp_options" {
  compartment_id = "${var.oci_compartment_ocid}"

  options {
    type        = "DomainNameServer"
    server_type = "CustomDnsServer"
    custom_dns_servers = [
      "${oci_core_instance.dns_forward_instance_01.private_ip}",
      "${oci_core_instance.dns_forward_instance_02.private_ip}",
      "169.254.169.254"
    ]
  }

  options {
    type                = "SearchDomain"
    search_domain_names = ["${var.private_dns_domain}"]
  }

  vcn_id = "${oci_core_virtual_network.pdns_vcn.id}"
}

resource "oci_core_subnet" "private_subnet" {
  cidr_block        = "${var.oci_cidr_private_subnet}"
  compartment_id    = "${var.oci_compartment_ocid}"
  vcn_id            = "${oci_core_virtual_network.pdns_vcn.id}"
  display_name      = "private-subnet"
  dns_label         = "privsubnet"
  dhcp_options_id   = "${oci_core_dhcp_options.pdns_dhcp_options.id}"
  security_list_ids = ["${oci_core_security_list.private_sl.id}"]
}

resource "oci_core_security_list" "private_sl" {
  compartment_id = "${var.oci_compartment_ocid}"
  vcn_id         = "${oci_core_virtual_network.pdns_vcn.id}"
  display_name   = "private-security-list"

  ingress_security_rules {
    source   = "10.0.0.0/16"
    protocol = "1"
  }

  ingress_security_rules {
    source   = "10.0.0.0/16"
    protocol = "6"

    tcp_options {
      min = "22"
      max = "22"
    }
  }

  egress_security_rules {
    destination = "0.0.0.0/0"
    protocol    = "all"
  }
}

resource "oci_core_subnet" "public_subnet" {
  cidr_block      = "${var.oci_cidr_public_subnet}"
  compartment_id  = "${var.oci_compartment_ocid}"
  vcn_id          = "${oci_core_virtual_network.pdns_vcn.id}"
  display_name    = "public-subnet"
  dns_label       = "pubsubnet"
  dhcp_options_id = "${oci_core_dhcp_options.pdns_dhcp_options.id}"
}
```
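To see what the fallback entry in the DHCP options buys us, here is a small Python model of a stub resolver working through the configured nameservers in order. It is a simplification: in this model only an unreachable server makes the resolver move on, while any answer, including "no such name", ends the lookup; real resolvers add retries, timeouts and options on top. The forwarder IPs 10.0.100.2 and 10.0.100.3, the resolved addresses and the helpers `resolve` and `make_fake_query` are hypothetical.

```python
from __future__ import annotations

from typing import Callable, Optional

# Order as in the DHCP options: forwarder 1, forwarder 2, OCI default resolver.
# The two forwarder IPs are hypothetical placeholders.
NAMESERVERS = ["10.0.100.2", "10.0.100.3", "169.254.169.254"]
OCI_RESOLVER = "169.254.169.254"

Query = Callable[[str, str], Optional[str]]


def resolve(name: str, nameservers: list[str], query: Query) -> Optional[str]:
    """Try each nameserver in turn; only an unreachable server moves us on.

    query(ns, name) returns an address, returns None for "no such name",
    or raises TimeoutError if ns does not answer.
    """
    for ns in nameservers:
        try:
            return query(ns, name)  # any answer, even "not found", ends the lookup
        except TimeoutError:
            continue
    raise TimeoutError(f"no nameserver answered for {name}")


def make_fake_query(down: set[str], private: dict[str, str], public: dict[str, str]) -> Query:
    """Simulated servers: forwarders know private and public names, OCI only public."""
    def query(ns: str, name: str) -> Optional[str]:
        if ns in down:
            raise TimeoutError(ns)
        if ns == OCI_RESOLVER:
            return public.get(name)
        return private.get(name, public.get(name))
    return query
```

With both forwarders down, the OCI default resolver still answers public names, but the private names no longer resolve: the fallback keeps public DNS alive, not the private zone.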

The test hosts are plain instances, so nothing special here.

```hcl
resource "oci_core_instance" "pdns_public_instance_01" {
  availability_domain = "${lookup(data.oci_identity_availability_domains.pdns_ads.availability_domains[0], "name")}"
  compartment_id      = "${var.oci_compartment_ocid}"
  shape               = "VM.Standard2.1"

  create_vnic_details {
    subnet_id              = "${oci_core_subnet.public_subnet.id}"
    assign_public_ip       = true
    skip_source_dest_check = true
  }

  display_name = "pdns-pub-01"

  metadata = {
    ssh_authorized_keys = "${var.ssh_public_key}"
  }

  source_details {
    source_id   = "${var.oci_base_image}"
    source_type = "image"
  }

  preserve_boot_volume = false
}

resource "oci_core_instance" "pdns_private_instance_01" {
  availability_domain = "${lookup(data.oci_identity_availability_domains.pdns_ads.availability_domains[1], "name")}"
  compartment_id      = "${var.oci_compartment_ocid}"
  shape               = "VM.Standard2.1"

  create_vnic_details {
    subnet_id              = "${oci_core_subnet.private_subnet.id}"
    assign_public_ip       = false
    skip_source_dest_check = true
  }

  display_name = "pdns-priv-01"

  metadata = {
    ssh_authorized_keys = "${var.ssh_public_key}"
  }

  source_details {
    source_id   = "${var.oci_base_image}"
    source_type = "image"
  }

  preserve_boot_volume = false
}
```

The last step is adding private DNS records for the two test hosts we just created. We give them the prettier names 01.priv.appserver.oci.private and 02.pub.appserver.oci.private. The IP in RDATA is the private IP of the corresponding host created by Terraform above.

```hcl
resource "oci_dns_record" "pdns_record_01" {
  zone_name_or_id = "${oci_dns_zone.pdns_zone.id}"
  domain          = "01.priv.appserver.${var.private_dns_domain}"
  rtype           = "A"
  rdata           = "${oci_core_instance.pdns_private_instance_01.private_ip}"
  ttl             = 300
}

resource "oci_dns_record" "pdns_record_02" {
  zone_name_or_id = "${oci_dns_zone.pdns_zone.id}"
  domain          = "02.pub.appserver.${var.private_dns_domain}"
  rtype           = "A"
  rdata           = "${oci_core_instance.pdns_public_instance_01.private_ip}"
  ttl             = 300
}
```
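In classic zone-file notation, the two records correspond to lines like the ones this Python sketch prints. Besides formatting, it checks that each name lies inside the zone (compared on whole labels) and that RDATA is a valid IPv4 address. The helpers `in_zone` and `a_record_line` and the example IPs are hypothetical; in the Terraform code the IPs come from the instances' private_ip attributes.

```python
from __future__ import annotations

import ipaddress


def in_zone(fqdn: str, zone: str) -> bool:
    """True if fqdn equals zone or lies below it, compared on whole labels."""
    fqdn = fqdn.lower().rstrip(".")
    zone = zone.lower().rstrip(".")
    return fqdn == zone or fqdn.endswith("." + zone)


def a_record_line(fqdn: str, ip: str, zone: str, ttl: int = 300) -> str:
    """Format an A record in zone-file notation after basic sanity checks."""
    if not in_zone(fqdn, zone):
        raise ValueError(f"{fqdn} is not inside zone {zone}")
    ipaddress.IPv4Address(ip)  # raises a ValueError subclass if rdata is not IPv4
    return f"{fqdn.rstrip('.')}. {ttl} IN A {ip}"


if __name__ == "__main__":
    # Hypothetical private IPs standing in for the instances' private_ip values.
    records = {
        "01.priv.appserver.oci.private": "10.0.1.10",
        "02.pub.appserver.oci.private": "10.0.2.20",
    }
    for name, ip in records.items():
        print(a_record_line(name, ip, "oci.private"))
```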

A quick check of the hosts' /etc/resolv.conf shows that the nameservers we configured are taken from DHCP.
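For a scripted version of this check, the following Python sketch reads the nameserver and search entries from resolv.conf content. It follows the resolv.conf(5) conventions that lines starting with `#` or `;` are comments and that the last `search`/`domain` line wins, and it keeps only the first three nameservers because glibc uses at most three (MAXNS). The function name and the sample forwarder addresses are hypothetical.

```python
from __future__ import annotations

MAXNS = 3  # glibc uses at most three nameserver entries


def parse_resolv_conf(text: str) -> tuple[list[str], list[str]]:
    """Return (nameservers, search domains) from resolv.conf content."""
    nameservers: list[str] = []
    search: list[str] = []
    for raw in text.splitlines():
        if not raw.strip() or raw[0] in "#;":
            continue  # blank line or comment
        keyword, *values = raw.split()
        if keyword == "nameserver" and values:
            nameservers.append(values[0])
        elif keyword in ("search", "domain"):
            search = values  # the last search/domain line wins
    return nameservers[:MAXNS], search


if __name__ == "__main__":
    # Hypothetical content as it might look on a test host.
    sample = (
        "search oci.private\n"
        "nameserver 10.0.100.2\n"
        "nameserver 10.0.100.3\n"
        "nameserver 169.254.169.254\n"
    )
    print(parse_resolv_conf(sample))
```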

Testing the setup

Finally, we can check whether name resolution works as expected. Both 01.priv.appserver.oci.private and 02.pub.appserver.oci.private resolve correctly on our public test host, and resolving a public DNS entry works too. So everything works as expected!
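Instead of running nslookup or dig by hand for each name, a small Python helper can resolve a list of names through the host's normal resolver configuration, and therefore through the DHCP-provided nameservers. `check_names` and `NAMES` are hypothetical, and www.oracle.com is just an arbitrary public name; the resolver function is injectable so the logic can be tested without a network.

```python
from __future__ import annotations

import socket
from typing import Callable, Optional

# Private names from this post plus an arbitrary public name (hypothetical choice).
NAMES = [
    "01.priv.appserver.oci.private",
    "02.pub.appserver.oci.private",
    "www.oracle.com",
]


def system_resolve(name: str) -> Optional[str]:
    """Resolve name via the host's resolver configuration; None on failure."""
    try:
        return socket.gethostbyname(name)
    except OSError:  # covers socket.gaierror and socket.herror
        return None


def check_names(
    names: list[str],
    resolve: Callable[[str], Optional[str]] = system_resolve,
) -> dict[str, Optional[str]]:
    """Map each name to its IPv4 address, or None if it did not resolve."""
    return {name: resolve(name) for name in names}
```

On the public test host, `check_names(NAMES)` should map all three names to addresses; a `None` marks a name that did not resolve.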

A small caveat at the end: the DNS forwarders sit in a subnet that does not use the forwarders for name resolution, so looking up private DNS names fails on the forwarder hosts themselves. Public DNS works, of course.